“Validating” your startup is a big buzzword lately. Heck, all you need is five minutes and a napkin. I think the term is misused.

Validation means running tests to confirm a piece of information you already believe. Exploration means running tests that give you value whether they fail or succeed. The difference is absolutely critical for startups. Simply validating commonly known information does little to produce privileged information. The real winners discover that twist: the UI that drives adoption, the untapped enterprise market, or the untested viral growth strategy. Why waste valuable resources on proving the known?

You are approaching validation (or what I prefer to call exploration) correctly if you get negative responses (failures) about 50% of the time. In the exploratory stage the most valuable learnings come with unexpected failures and unexpected successes.

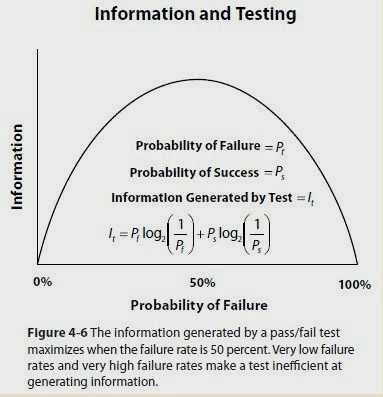

Why? In The Principles of Product Development Flow: Second Generation Lean Product Development, Donald G. Reinertsen shows the following graph to demonstrate that the average information generated by such a test is a function of its failure rate:

This should look familiar to engineers as the theoretical basis behind the binary search algorithm, but for the rest of us Reinertsen explains:

> The equation in Figure 4–6 shows that the information content of a test comes from the degree of surprise associated with each possible result. Any unexpected success has high information content, as does any unexpected failure. Unfortunately, most companies do a far better job of communicating their successes than their failures. They carefully document successes, but fail to document failures. This can cause them to repeat the same failures. Repeating the same failures is waste, because it generates no new information. Only new failures generate information.

The takeaway? At first, do more exploring and less validating. You are attempting to purchase information, and to maximize the value of that information you must design tests that fail as often as they succeed.
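To make the shape of that curve concrete, here is a minimal sketch. It assumes the graph is the standard binary (Shannon) entropy of a pass/fail test, which is my reading of the equation rather than something spelled out here. The function name `information_bits` and the sample failure rates are made up for illustration.

```python
import math


def information_bits(p_fail: float) -> float:
    """Expected information (in bits) from one pass/fail test.

    Assumes the standard binary entropy formula:
    sum over both outcomes of p * log2(1 / p).
    """
    if not 0.0 <= p_fail <= 1.0:
        raise ValueError("p_fail must be between 0 and 1")
    bits = 0.0
    for p in (p_fail, 1.0 - p_fail):
        if p > 0.0:  # an outcome that never happens contributes nothing
            bits += p * math.log2(1.0 / p)
    return bits


# Compare "easy" tests (rarely fail) with tests that fail about half the time.
for p_fail in (0.0, 0.05, 0.25, 0.5, 0.75, 0.95, 1.0):
    print(f"failure rate {p_fail:4.0%}: {information_bits(p_fail):.2f} bits")
```

Under that assumption, a test that fails half the time yields a full bit of information, while one that fails only 5% of the time yields roughly 0.29 bits. A test that always passes (or always fails) tells you nothing you did not already know.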

Easy Test: “Hand entering those receipts must be pretty painful huh?”

Hard Test: “Will you sign a year-long contract for $22,000 if I can solve that problem for you?”

If every interview confirms your core assumption(s), then you are likely asking the wrong questions, or you are simply eliciting “known” information that is shared broadly and not particularly valuable. Like drug companies, you are looking to perform tests whose unexpected results drive asymmetric payoffs. You are not simply validating what is known, and you are not failing for failure’s sake.

For example, almost everyone finds taxes, losing weight, balancing a checkbook, and home buying painful. This is why there are such large industries around solving those problems. A company like Mint didn’t need to validate that home budgeting was difficult. Rather, it needed to validate that you could disrupt with automation and bank relationships, that you could take advantage of new bank APIs, and that there was a critical tipping point in people’s willingness to share financial data.

Some problems / pains are incredibly clear and poignant — they’ve been around for a long time — but the solution is elusive because you’ve got an “integration” problem. For example, take the music business. Everyone knew it was broken. Countless startups tried to address the problem. But it was Apple who side-stepped the integration / coordination problem.

Additionally, very few validation efforts take sufficient measures to avoid “leading the witness”. Confirmation bias is in full effect, and we likely only hear what we want to hear. We are literally “validating” ourselves instead of figuring out where there is an angle for a business.

Most pain is known. The trick is timing, understanding sea changes in terms of user habits, unraveling integration issues to force disruption, and developing faster/cheaper ways to do things. Write some scripts, stop leading the witness, and make sure your interviews are challenging your assumptions as often as they are confirming your assumptions.