I’ve been using this exercise recently, and have mentioned it at a handful of conferences.

The idea is super simple (and almost certainly not original). One important note on accessibility first: what I’m describing here is a simple product exercise to expand your thinking, but for many people, these constraints are a reality.

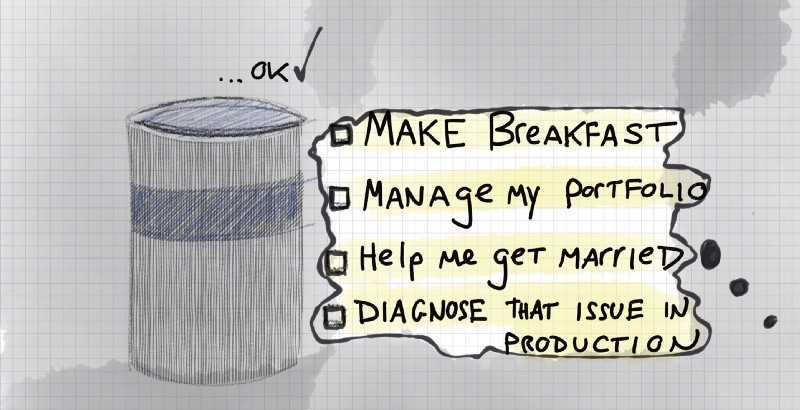

Bring Alexa (or a similar device) to work. Put it in the middle of the room. Imagine that Alexa is the interface for your product, and all interactions are voice interactions. Assume that the user IS NOT simply navigating your current product with their voice.

What might those exchanges look like? Some quick examples:

- Alexa, tell me an interesting story about one of my friends.

- Alexa, please double-check it is me the next time I ask for sensitive financial information. I’ll feel more secure.

- Alexa, can you get food delivered from one of our top three restaurants? And make it arrive when I’m projected to get home from work?

- Alexa, please monitor the support queue tonight. Do your best to answer questions. If something serious comes up, notify me immediately.

- Alexa, maximize my refund and prevent an audit! OK?

- Alexa, what is the key predictor of customer churn? And what can we do to fix that problem?

- Alexa, we’re leaving for vacation. If someone calls with a maintenance issue for one of our properties, find the lowest cost available vendor who can fix the issue.

You’ll start to notice some interesting things.

- When do people ask questions vs. issue commands?

- How do they request information? If your product features charts and tables, how do users achieve their goals using only voice interactions?

- What gets left out?

- How much do you align your product thinking with your product’s interface? Can the two be decoupled?

Give it a try. Who knows, this might be how people access your product sooner than you think. Is it still the same product? Hmmmm. :)