A random walk through the SEBoK glossary…

Have you checked out the Guide to the Systems Engineering Body of Knowledge (SEBoK)? I suggest you do. In this post, I am going to take a semi-random walk through the SEBoK glossary. Why? It’s an awesome resource, especially when you find yourself pondering the principles underpinning how we grapple with software development.

Note: I’ve likely butchered a bunch of SE concepts. Oh well! Gotta start somewhere.

What system do we have in mind?

Are we thinking about a hard system that can be “solved” solely with engineering methods, or a soft system “which require[s] learning in order to make improvements”? Most of us are operating in sociotechnical systems featuring “a combination of technical and human or natural elements”. In service systems, we maintain a “dynamic configuration of resources (people, technology, organizations and shared information)”. The question is… how quickly must we reconfigure (adapt) those resources for our specific system context? A commercial service enterprise such as a bank…

…sells a variety of products as services to their customers; for example, this includes current accounts, savings accounts, loans, and investment management. These services add value and are incorporated into customer systems of individuals or enterprises. The service enterprise’s support system will also likely evolve opportunistically, as new infrastructure capabilities or demand patterns emerge. (link)

To what degree is the system exhibiting emergent behavior that could not be predicted beforehand? For example, customers might use our product in unexpected ways, or partners might form unexpected alliances and create new ecosystems. The history of Airbnb is filled with unexpected twists. If we expect no new behaviors to emerge, we can take a different approach to hardening the solution. Constructing a skyscraper might exhibit less emergent behavior than offering a new cloud computing service. Building a bridge might be “easier” than designing a new compensation structure in a coercive culture, or starting a crowd-sourced dog-walking service. Weird, right?

One of the challenges of project-centric thinking is that it frequently hinges on a “defined start and finish criteria”. The assumption is that scope is pinned to a set of known capabilities, fitness can be verified upon delivery, and that the design life has a known duration. “A viable system is any system organised in such a way as to meet the demands of surviving in the changing environment”, so the question really boils down to the environment and how quickly it is changing. If we cannot forecast the demands of the changing environment ahead of time, then we must take a more evolutionary approach. If forecasting is feasible, then we can take a more linear/sequential approach. Your environment will dictate how projects are competently governed, scoped, managed, and executed.

The debate over #NoEstimates and #NoProjects tends to ignore context like:

type of systems involved, their longevity, and the need for rapid adaptation to unforeseen changes, whether in competition, technology, leadership, or mission priorities (link).

A commercial service enterprise is not playing the same game as a defense contractor. Chips are played in both games, but in different ways. “Estimates” and “Projects” are not the actual problem. My guess is that the real problem is failing to sense the environment correctly, and therefore selecting the wrong life-cycle model. Or there’s low safety, poor drift correction, entropy, and absent critical systems thinking. If the argument is that “practice X isn’t working”, why was the organization unable to see or sense that?

In my current domain (B2B software-as-a-service help desk), customers pay rent to access our hosted product. The customer does not hand over their hard-earned cash in one go, grab the pair of shoes, and leave the store. 100,000 customers (and millions of their customers) access the same product suite. There is an expectation that we will continuously improve service delivery and, for the most part, keep prices stable. Iterating on and optimizing existing capabilities is the norm. Often, the fastest way to learn is to deliver small increments to our customers and conduct in-process validation and acceptance sampling. But my domain is one of many. It is altogether different from a 1:1 SAP implementation, for example.

It is very hard to claim “% complete”.

One challenge with project planning and funding is that it tends to encourage premature convergence on a design, a fixed set of requirements, and an end date. The customer (internal or external) wants to know what they’re “going to get” and “by when”. A better question is “what capabilities will be possible, and on what schedule”. Capabilities are the antithesis of premature solutioning. They represent…

- “current and future needs independent of current solution technology”

- “outcomes a user needs to achieve which connect the systems feature to the business or enterprise benefit”

- “describe current and future needs independent of current solution technology”

In SaaS, we may do a first-pass design to 1) learn more about user needs, and 2) deliver early “scaffolding” for a capability. Achieving the outcome is possible, but a lot of bells and whistles are missing. Then we add more features and enrich the design. And often, months or years later, we go back and change the delivery mechanism altogether. A great example is our use of machine learning to proactively answer support tickets (the capability) for some benefit (cost savings, increased customer satisfaction). For my current company, this is an ongoing mission. And it is possible because we can deliver, at scale, to all customers, whenever we want.

Finally, we have attributes like agility, adaptability, resilience, robustness, and reliability. The business world is littered with companies that were purpose-built for one environment, and then things changed (disrupted and threatened). But that doesn’t mean that all enterprises need the same brand of agility, adaptability, reliability, etc. Reliability means something different to Google than it does to an oil rig. In his notes about Google SREs, Dan Luu writes:

Goal of SRE team isn’t “zero outages” — SRE and product devs are incentive aligned to spend the error budget to get maximum feature velocity. if a user is on a smartphone with 99% reliability, they can’t tell the difference between 99.99% and 99.999% reliability

So, with that, I close. It is all about the nature of the environment, and our ability to make some sense of, and thrive in, that environment.
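One quick aside on the smartphone point in Dan Luu’s notes: the arithmetic is easy to check. Here’s a minimal Python sketch. It assumes (my assumption, not something from his notes) that the phone and the service fail independently and that a request only succeeds when both are up, so their availabilities multiply. It also assumes a 30-day window for the error budget. The function names are hypothetical, chosen just for illustration.

```python
# Minimal sketch: serial availability and error-budget arithmetic.
# Assumptions (hypothetical, for illustration): phone and service fail
# independently, a request succeeds only if both are up, and the error
# budget is measured over a 30-day window.

MINUTES_PER_30_DAYS = 30 * 24 * 60  # 43,200 minutes


def end_to_end_availability(*components: float) -> float:
    """Availability of components in series, assuming independent failures."""
    result = 1.0
    for availability in components:
        result *= availability
    return result


def error_budget_minutes(slo: float, window_minutes: int = MINUTES_PER_30_DAYS) -> float:
    """Downtime allowed in the window for a given availability target."""
    return (1.0 - slo) * window_minutes


if __name__ == "__main__":
    phone = 0.99  # the smartphone figure from the quote
    for service in (0.9999, 0.99999):
        user_sees = end_to_end_availability(phone, service)
        budget = error_budget_minutes(service)
        print(f"service={service:.3%} user_sees={user_sees:.3%} budget={budget:.2f} min per 30 days")
```

With a 99% phone, the user sees roughly 98.99% end to end whether the service runs at 99.99% or 99.999%, while the service’s 30-day error budget shrinks from 4.32 minutes to about 26 seconds. Chasing the extra nine buys the user almost nothing, which is why spending that budget on feature velocity can be the better trade.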

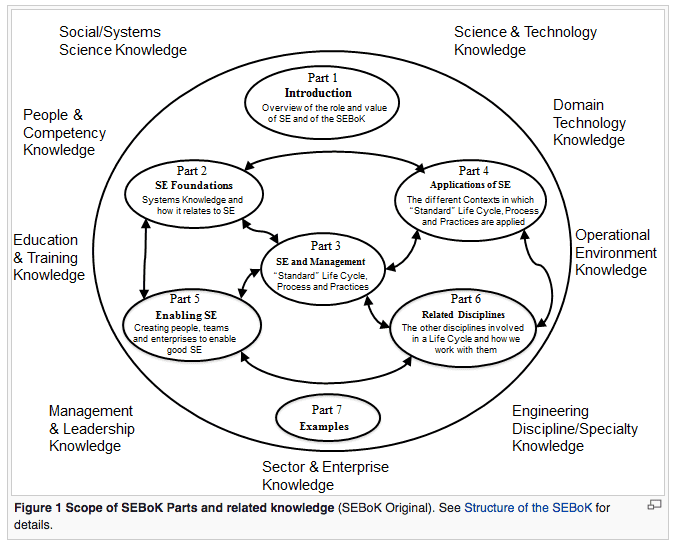

Have fun on the SEBoK site! A neat overview…

http://sebokwiki.org/wiki/Guide_to_the_Systems_Engineering_Body_of_Knowledge_(SEBoK)
